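Introduction

First, a brief introduction to p5.js.

p5.js is a JavaScript library based on Processing that gives the browser's canvas a friendly programming interface. (https://creativecoding.in/2020/04/24/p5-js-%E5%BF%AB%E9%80%9F%E4%B8%8A%E6%89%8B/)

What p5.js and ml5 have in common is that both can use the GPU and its dedicated memory through the browser.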

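Preparation

Next, prepare the files.

- In hello-ml5, create two files, one named face_p5.html and the other named face_nop5.html.

- Enter the following code in face_p5.html and face_nop5.html respectively.

The code for face_p5.html is as follows: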

<html>
<head>
  <meta charset="UTF-8">
  <title>FaceApi Landmarks Demo With p5.js</title>
  <script src="https://cdnjs.cloudflare.com/ajax/libs/p5.js/0.9.0/p5.min.js"></script>
  <script src="https://cdnjs.cloudflare.com/ajax/libs/p5.js/0.9.0/addons/p5.dom.min.js"></script>
  <!-- ml5.faceApi is part of the ml5 0.x API, so a 0.x release is pinned here instead of @latest -->
  <script src="https://unpkg.com/ml5@0.12.2/dist/ml5.min.js"></script>
</head>
<body>
  <h1>FaceApi Landmarks Demo With p5.js</h1>
  <script src="sketch_face_p5.js"></script>
</body>
</html>

The code for face_nop5.html is as follows:

<html>
<head>
  <meta charset="UTF-8">
  <title>FaceApi Landmarks Demo With no p5.js</title>
  <!-- ml5.faceApi is part of the ml5 0.x API, so a 0.x release is pinned here instead of @latest -->
  <script src="https://unpkg.com/ml5@0.12.2/dist/ml5.min.js" type="text/javascript"></script>
</head>
<body>
  <h1>FaceApi Landmarks Demo With no p5.js</h1>
  <script src="sketch_face_nop5.js"></script>
</body>
</html>

- In hello-ml5, create two more files, one named sketch_face_p5.js and the other named sketch_face_nop5.js, and enter the following code in each.

The code for sketch_face_p5.js is as follows:

let faceapi;
let video;
let detections;
const detection_options = {
  withLandmarks: true,
  withDescriptors: false
}
let width = 360;
let height = 280;

function setup() {
  createCanvas(width, height);
  // load up your video
  video = createCapture(VIDEO);
  video.size(width, height);
  video.hide(); // Hide the video element, and just show the canvas
  faceapi = ml5.faceApi(video, detection_options, modelReady);
}

function modelReady() {
  console.log('ready!');
  faceapi.detect(gotResults);
}

function gotResults(err, result) {
  if (err) {
    console.log(err);
    return;
  }
  // console.log(result)
  detections = result;
  image(video, 0, 0, width, height);
  if (detections) {
    if (detections.length > 0) {
      drawLandmarks(detections);
    }
  }
  faceapi.detect(gotResults);
}

function drawLandmarks(detections) {
  stroke(161, 95, 251);
  for (let i = 0; i < detections.length; i++) {
    const alignedRect = detections[i].alignedRect;
    const x = alignedRect._box._x;
    const y = alignedRect._box._y;
    const boxWidth = alignedRect._box._width;
    const boxHeight = alignedRect._box._height;
    rect(x, y, boxWidth, boxHeight);
    const mouth = detections[i].parts.mouth;
    const nose = detections[i].parts.nose;
    const leftEye = detections[i].parts.leftEye;
    const rightEye = detections[i].parts.rightEye;
    const rightEyeBrow = detections[i].parts.rightEyeBrow;
    const leftEyeBrow = detections[i].parts.leftEyeBrow;
    drawPart(mouth, true);
    drawPart(nose, false);
    drawPart(leftEye, true);
    drawPart(leftEyeBrow, false);
    drawPart(rightEye, true);
    drawPart(rightEyeBrow, false);
  }
}

function drawPart(feature, closed) {
  beginShape();
  for (let i = 0; i < feature.length; i++) {
    const x = feature[i]._x;
    const y = feature[i]._y;
    vertex(x, y);
  }
  if (closed === true) {
    endShape(CLOSE);
  } else {
    endShape();
  }
}
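The drawing code above reads each detection through fields such as `alignedRect._box._x` and `parts.mouth[i]._x`. As a rough sketch of that shape, the hypothetical helper `summarizeDetection` below flattens one detection into plain numbers. The sample object is made up for illustration and is not real model output; only the field names are taken from the listing.

```javascript
// Hypothetical helper (not part of ml5): flatten one detection into plain numbers.
// Assumes the result shape read by drawLandmarks in the listing above.
function summarizeDetection(detection) {
  const box = detection.alignedRect._box;
  const parts = {};
  for (const [name, points] of Object.entries(detection.parts)) {
    // Each landmark point is assumed to expose _x and _y.
    parts[name] = points.map((p) => [p._x, p._y]);
  }
  return {
    box: { x: box._x, y: box._y, width: box._width, height: box._height },
    parts,
  };
}

// Made-up sample data in the assumed shape.
const sampleDetection = {
  alignedRect: { _box: { _x: 10, _y: 20, _width: 100, _height: 120 } },
  parts: {
    mouth: [{ _x: 40, _y: 110 }, { _x: 60, _y: 112 }, { _x: 80, _y: 110 }],
    nose: [{ _x: 60, _y: 70 }, { _x: 60, _y: 90 }],
  },
};

const summary = summarizeDetection(sampleDetection);
console.log(summary.box);
console.log(summary.parts.mouth);
```

Logging a summary like this inside gotResults can be an easier way to inspect results than dumping the whole result object.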

The code for sketch_face_nop5.js is as follows:

let faceapi;
let video;
let detections;
const detection_options = {
  withLandmarks: true,
  withDescriptors: false
}
let width = 360;
let height = 280;

window.addEventListener('DOMContentLoaded', function () {
  setup();
});

async function setup() {
  createCanvas(width, height);
  // load up your video
  video = await getVideo();
  faceapi = ml5.faceApi(video, detection_options, modelReady);
}

let canvas, ctx;

function createCanvas(w, h) {
  canvas = document.createElement("canvas");
  canvas.width = w;
  canvas.height = h;
  document.body.appendChild(canvas);
  ctx = canvas.getContext('2d');
}

async function getVideo() {
  const videoElement = document.createElement('video');
  videoElement.width = width;
  videoElement.height = height;
  videoElement.setAttribute("style", "display: none;"); // Hide the video element, and just show the canvas
  document.body.appendChild(videoElement);
  // Create a webcam capture
  const capture = await navigator.mediaDevices.getUserMedia({ video: true });
  videoElement.srcObject = capture;
  videoElement.play();
  return videoElement;
}

function modelReady() {
  console.log('ready!');
  faceapi.detect(gotResults);
}

function gotResults(err, result) {
  if (err) {
    console.log(err);
    return;
  }
  // console.log(result)
  detections = result;
  ctx.drawImage(video, 0, 0, width, height);
  if (detections) {
    if (detections.length > 0) {
      drawLandmarks(detections);
    }
  }
  faceapi.detect(gotResults);
}

function drawLandmarks(detections) {
  ctx.strokeStyle = "#a15ffb";
  for (let i = 0; i < detections.length; i++) {
    const alignedRect = detections[i].alignedRect;
    const x = alignedRect._box._x;
    const y = alignedRect._box._y;
    const boxWidth = alignedRect._box._width;
    const boxHeight = alignedRect._box._height;
    ctx.beginPath();
    ctx.rect(x, y, boxWidth, boxHeight);
    ctx.fillStyle = "white";
    ctx.fill();
    const mouth = detections[i].parts.mouth;
    const nose = detections[i].parts.nose;
    const leftEye = detections[i].parts.leftEye;
    const rightEye = detections[i].parts.rightEye;
    const rightEyeBrow = detections[i].parts.rightEyeBrow;
    const leftEyeBrow = detections[i].parts.leftEyeBrow;
    drawPart(mouth, true);
    drawPart(nose, false);
    drawPart(leftEye, true);
    drawPart(leftEyeBrow, false);
    drawPart(rightEye, true);
    drawPart(rightEyeBrow, false);
  }
}

function drawPart(feature, closed) {
  ctx.beginPath();
  for (let i = 0; i < feature.length; i++) {
    const x = feature[i]._x;
    const y = feature[i]._y;
    if (i === 0) {
      ctx.moveTo(x, y);
    } else {
      ctx.lineTo(x, y);
    }
  }
  if (closed === true) {
    ctx.closePath();
  }
  ctx.stroke();
}
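In the plain-JavaScript version, drawPart has to spell out the canvas path calls that p5.js hides behind beginShape, vertex and endShape. One way to look at that sequence without a browser is to pass in a stand-in context that only records calls. The sketch below does this; `createRecordingContext` and `drawPartOn` are hypothetical names introduced here, and `drawPartOn` is the same logic as drawPart above with the context passed as a parameter instead of read from a global.

```javascript
// Hypothetical stand-in for a CanvasRenderingContext2D: it draws nothing
// and only records which methods were called, with their arguments.
function createRecordingContext() {
  const calls = [];
  const record = (name) => (...args) => {
    calls.push([name, ...args]);
  };
  return {
    calls,
    beginPath: record('beginPath'),
    moveTo: record('moveTo'),
    lineTo: record('lineTo'),
    closePath: record('closePath'),
    stroke: record('stroke'),
  };
}

// Same logic as drawPart in the listing above, with ctx as a parameter.
function drawPartOn(ctx, feature, closed) {
  ctx.beginPath();
  for (let i = 0; i < feature.length; i++) {
    const x = feature[i]._x;
    const y = feature[i]._y;
    if (i === 0) {
      ctx.moveTo(x, y); // start the path at the first landmark point
    } else {
      ctx.lineTo(x, y); // connect each following point
    }
  }
  if (closed === true) {
    ctx.closePath(); // join the last point back to the first
  }
  ctx.stroke();
}

// Made-up points in the shape the listing expects (_x, _y).
const points = [{ _x: 1, _y: 2 }, { _x: 3, _y: 4 }, { _x: 5, _y: 6 }];

const closedRecorder = createRecordingContext();
drawPartOn(closedRecorder, points, true);
console.log(closedRecorder.calls.map((call) => call[0]).join(' '));
// beginPath moveTo lineTo lineTo closePath stroke

const openRecorder = createRecordingContext();
drawPartOn(openRecorder, points, false);
console.log(openRecorder.calls.map((call) => call[0]).join(' '));
// beginPath moveTo lineTo lineTo stroke
```

Passing the context in as a parameter is a design choice made only for this illustration; the listing itself keeps ctx as a global.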

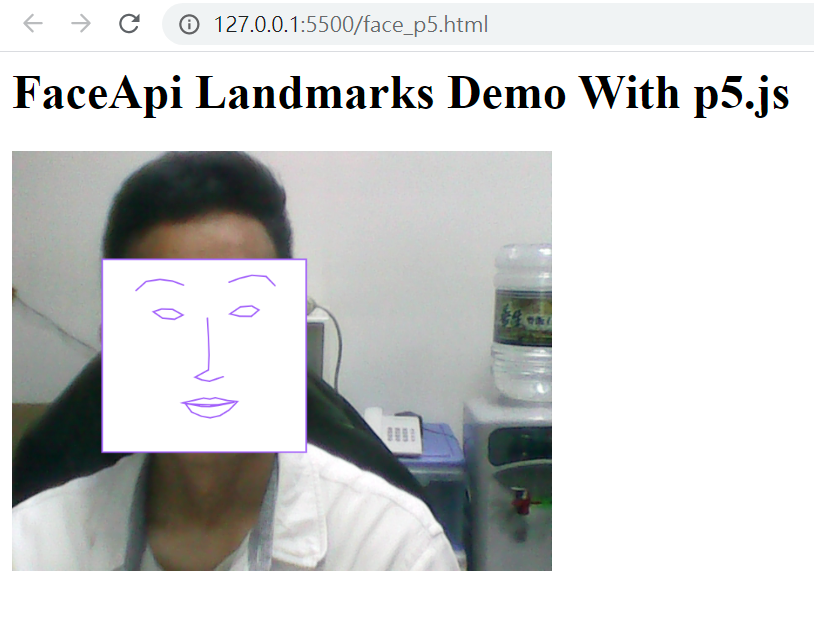

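Running

Once the files are ready, start Live Server.

In VS Code, right-click inside the face_p5.html code and choose Open with Live Server from the context menu.

The page shown below then appears. (The browser first asks whether to let the page use the webcam; click Allow.)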

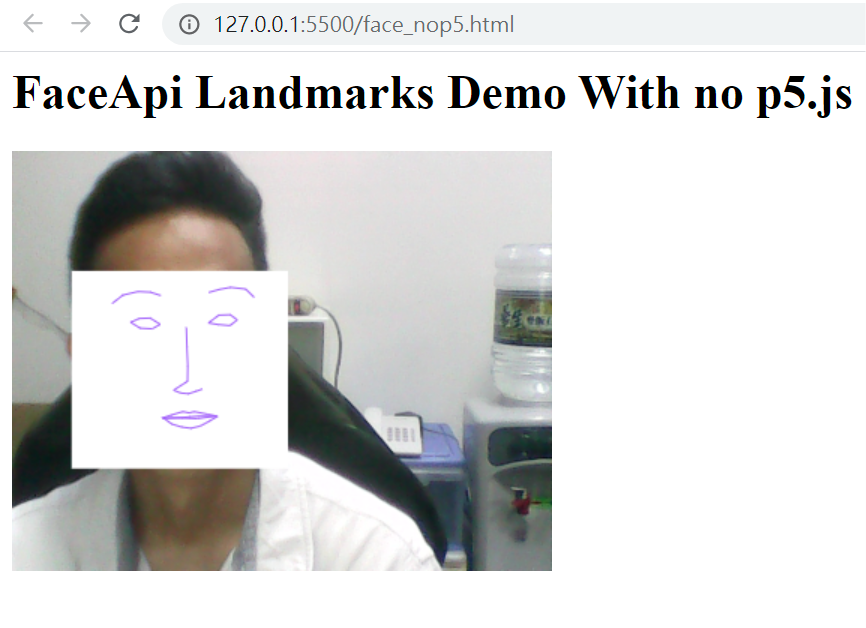
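The listings above assume the webcam permission is granted. As a minimal sketch of what handling a refusal could look like, the hypothetical wrapper `requestCamera` below catches the rejection from getUserMedia and reports a reason. Browsers reject with an error named NotAllowedError when permission is denied. The `mediaDevices` object is passed in as a parameter, and the two stand-in objects are made up so the sketch can be tried outside a browser.

```javascript
// Hypothetical wrapper around getUserMedia that reports failure instead of throwing.
// mediaDevices is injected; in a page it would be navigator.mediaDevices.
async function requestCamera(mediaDevices) {
  try {
    const stream = await mediaDevices.getUserMedia({ video: true });
    return { ok: true, stream };
  } catch (err) {
    // NotAllowedError is the error name used when the user denies permission.
    const reason = err.name === 'NotAllowedError'
      ? 'permission denied'
      : 'camera unavailable';
    return { ok: false, reason };
  }
}

// Made-up stand-ins for navigator.mediaDevices, for illustration only.
const allowingDevices = {
  getUserMedia: async () => ({ id: 'fake-stream' }),
};
const denyingDevices = {
  getUserMedia: async () => {
    const err = new Error('denied');
    err.name = 'NotAllowedError';
    throw err;
  },
};

requestCamera(allowingDevices).then((result) => console.log(result.ok));
requestCamera(denyingDevices).then((result) => console.log(result.reason));
```

In the browser, the call would be `requestCamera(navigator.mediaDevices)`, and the page could show a message instead of a blank canvas when `ok` is false.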

Open face_nop5.html with the same steps to display its page.

As you can see, the two produce the same result.

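Comparison

Comparing sketch_face_p5.js and sketch_face_nop5.js in WinMerge shows that whatever p5.js can do, plain JavaScript could already do.

Still, p5.js really does make the code more concise: in this example it cuts roughly a quarter of the code.

So both p5.js and ml5 let programmers who are adopting a new technology concentrate on their own custom requirements.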
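The size of the saving can be checked with a little arithmetic. Counting the non-blank lines of the two listings in this post gives 72 for sketch_face_p5.js and 99 for sketch_face_nop5.js (comment lines included). The hypothetical helper `relativeReduction` below turns those two counts into a percentage.

```javascript
// Non-blank line counts of the two listings in this post (comments included).
const p5LineCount = 72;    // sketch_face_p5.js
const plainLineCount = 99; // sketch_face_nop5.js

// Hypothetical helper: fraction of lines saved going from `before` to `after`.
function relativeReduction(before, after) {
  return (before - after) / before;
}

const reduction = relativeReduction(plainLineCount, p5LineCount);
console.log(`${(reduction * 100).toFixed(1)}% fewer lines with p5.js`);
// 27.3% fewer lines with p5.js
```

Line count is only a rough measure, since it depends on formatting, but it agrees with the impression from the WinMerge comparison.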

Source: https://ithelp.ithome.com.tw/articles/10253993
